Yu Yao is a fourth-year PhD student in Robotics at the A2sys Lab, University of Michigan. His research interests include deep learning, Bayesian learning, and computer vision. He focuses specifically on vision-based prediction, spatio-temporal event detection, and their applications in intelligent transportation systems (e.g., autonomous vehicles). He is also interested in advanced artificial intelligence topics such as game theory and deep reinforcement learning.

Ph.D. in Robotics, 2020

University of Michigan

M.S. in Robotics, 2017

University of Michigan

B.Eng. in Aerospace Engineering, 2015

Beijing Institute of Technology

Nov 2, 2019, 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)

Jun 17, 2019, The 4th Workshop on Egocentric Perception, Interaction and Computing @ CVPR 2019

May 22, 2019, 2019 IEEE International Conference on Robotics and Automation (ICRA)

Apr 28, 2019, 3rd IAVSD Workshop on Dynamics of Road Vehicles in Connected and Automated Vehicles

Jul 27, 2017, AIAA Intelligent Systems Workshop

We propose an unsupervised/weakly supervised approach for detecting on-road anomalies in first-person videos and localizing the anomalous objects.

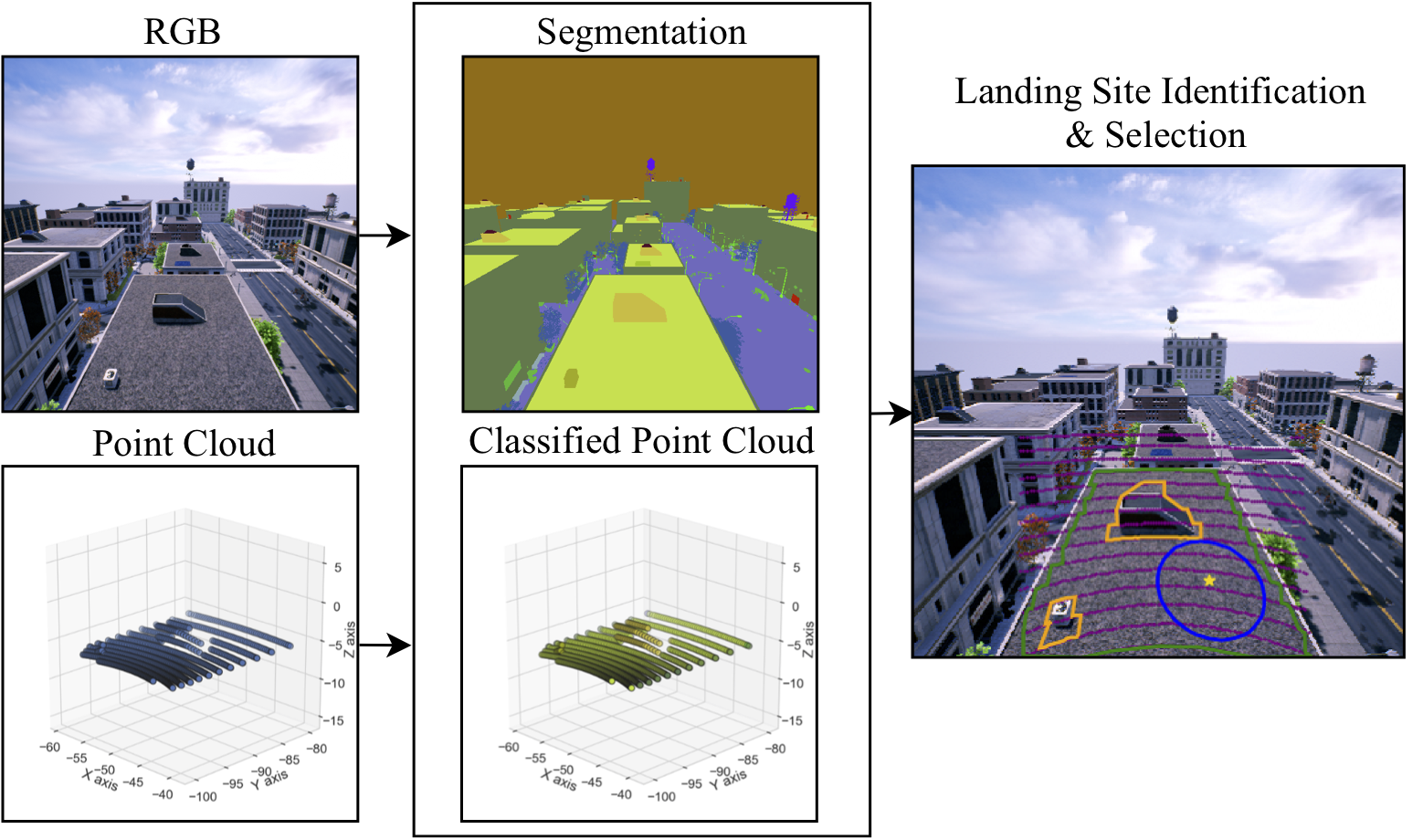

We propose a real-time method for identifying rooftop landing sites that combines poly-lidar, image semantic segmentation, and lidar-camera data fusion. Hardware-in-the-loop (HIL) tests were run on an NVIDIA Jetson TX2.

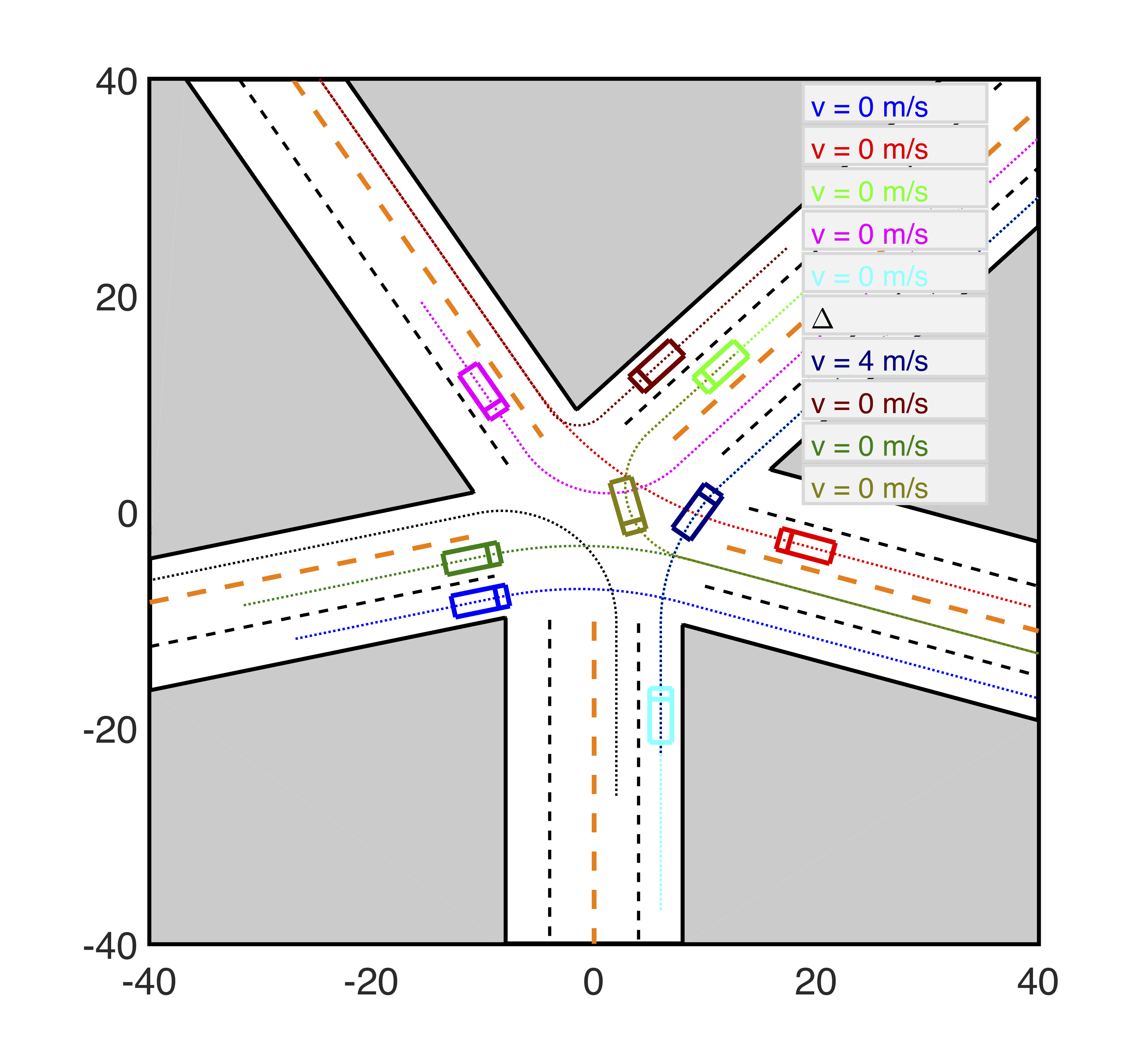

We propose a pairwise leader-follower model for autonomous driving in uncontrolled intersection scenarios.

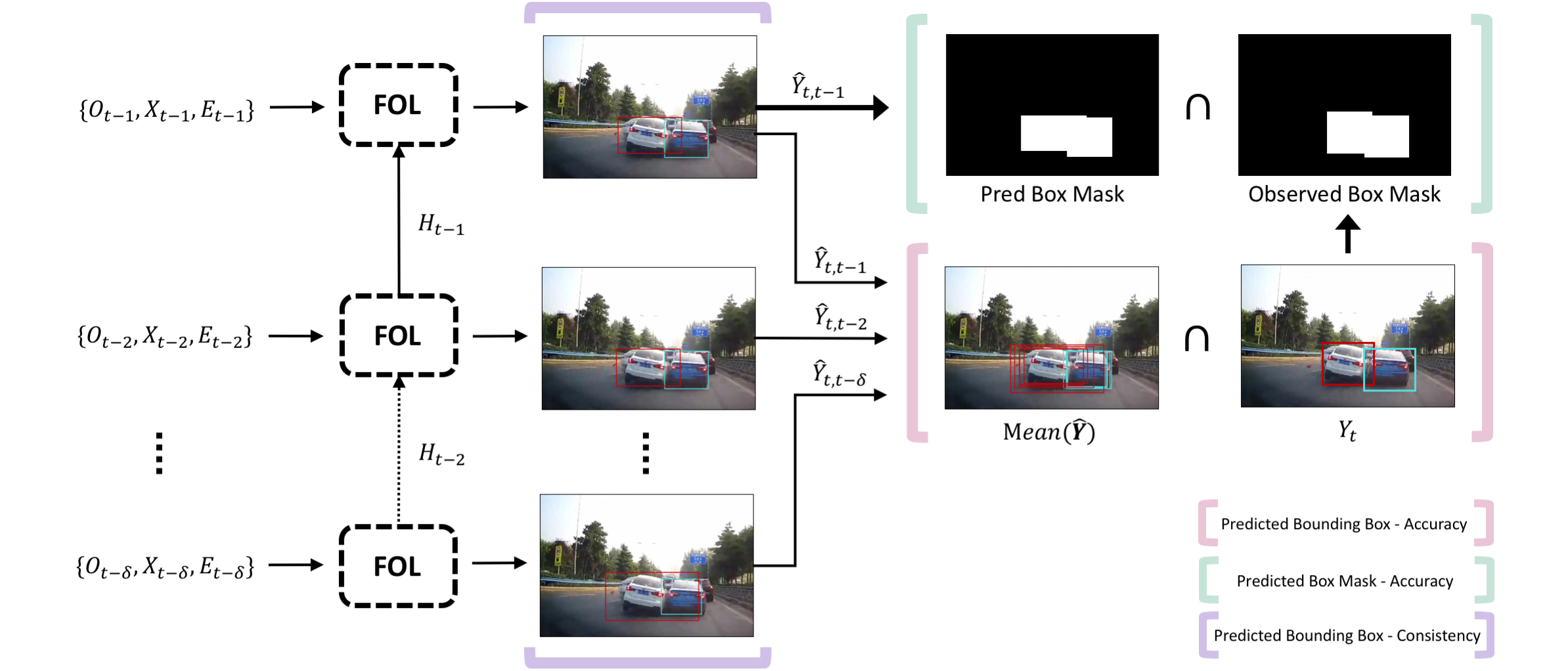

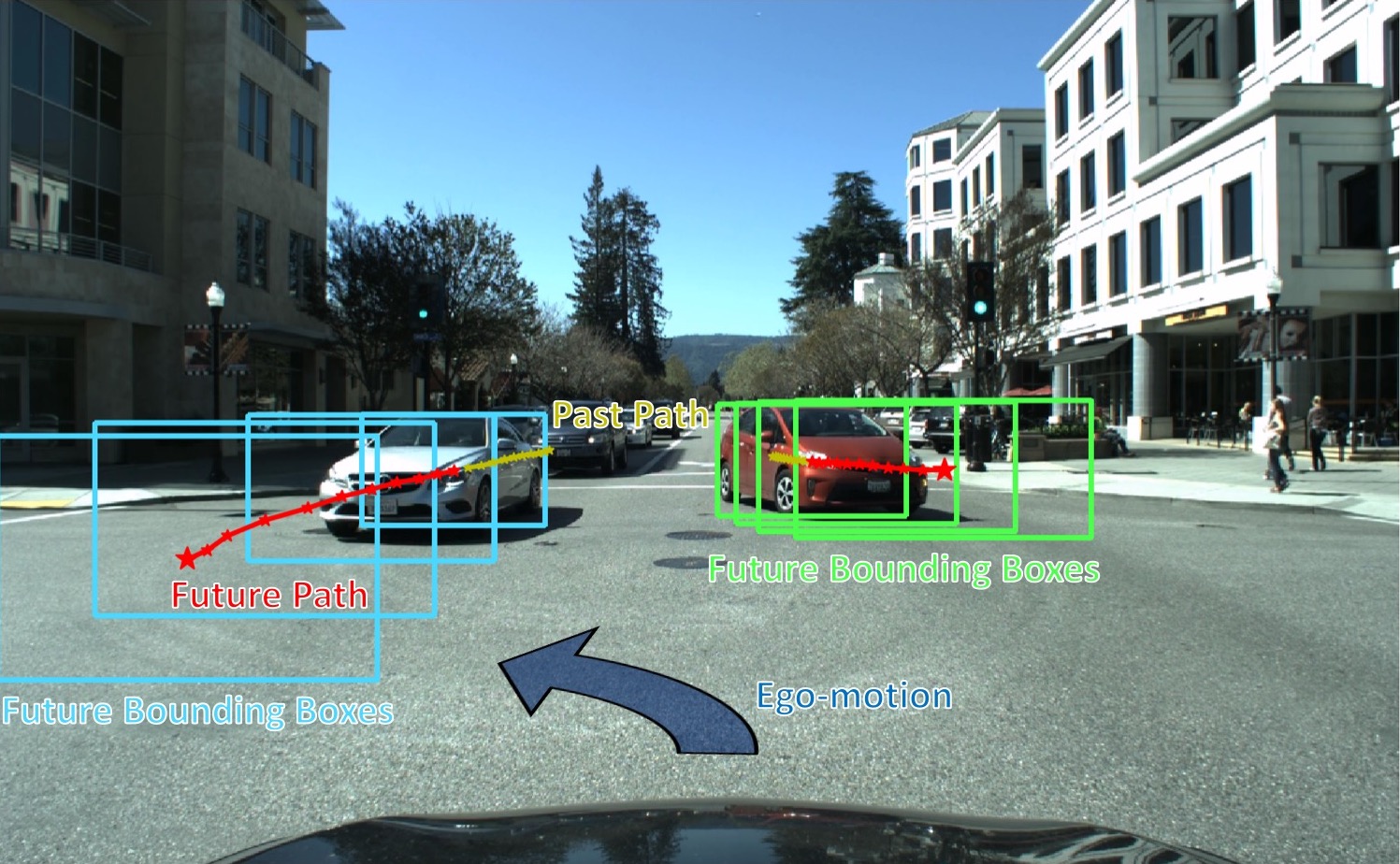

We investigate predicting the future locations of on-road objects by modeling their appearance and motion, as well as their interactions with other traffic participants and the surrounding scene.

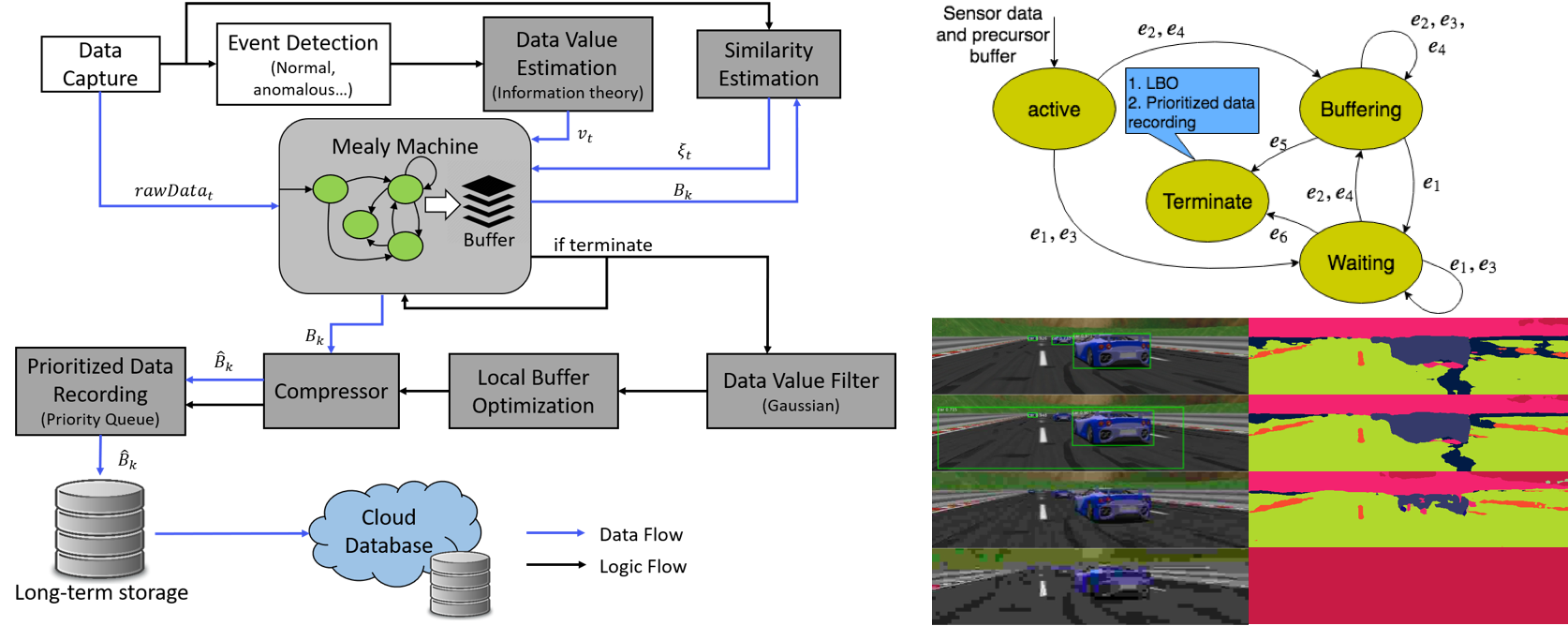

We propose a value-driven event data recorder for advanced and autonomous vehicles, called the Smart Black Box.

The goal of this project was to identify and track another small unmanned aircraft system (UAS) that sporadically flew into and across the field of view.

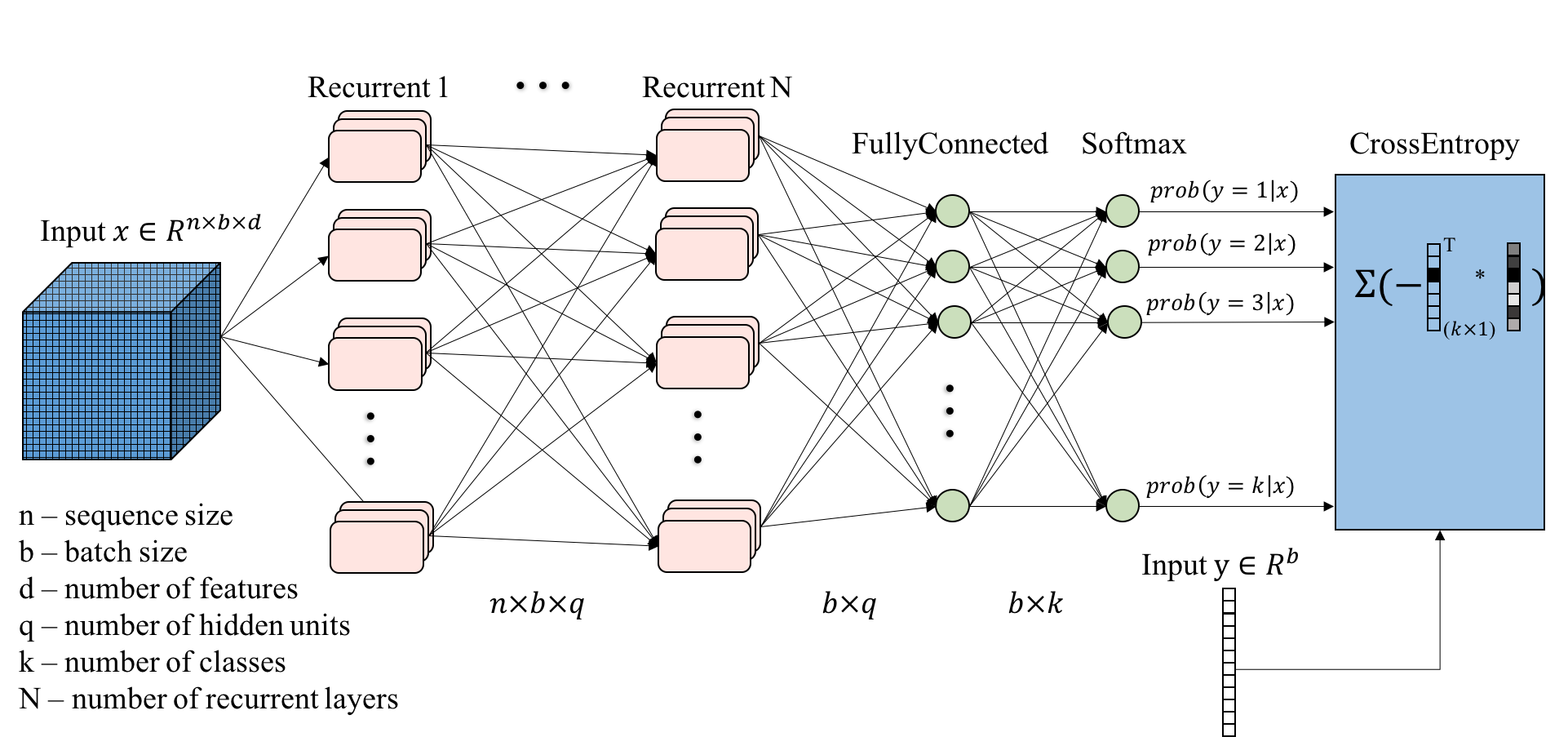

This project proposes a human activity recognition approach based on long short-term memory (LSTM) networks, using inertial sensor data from smartphones.

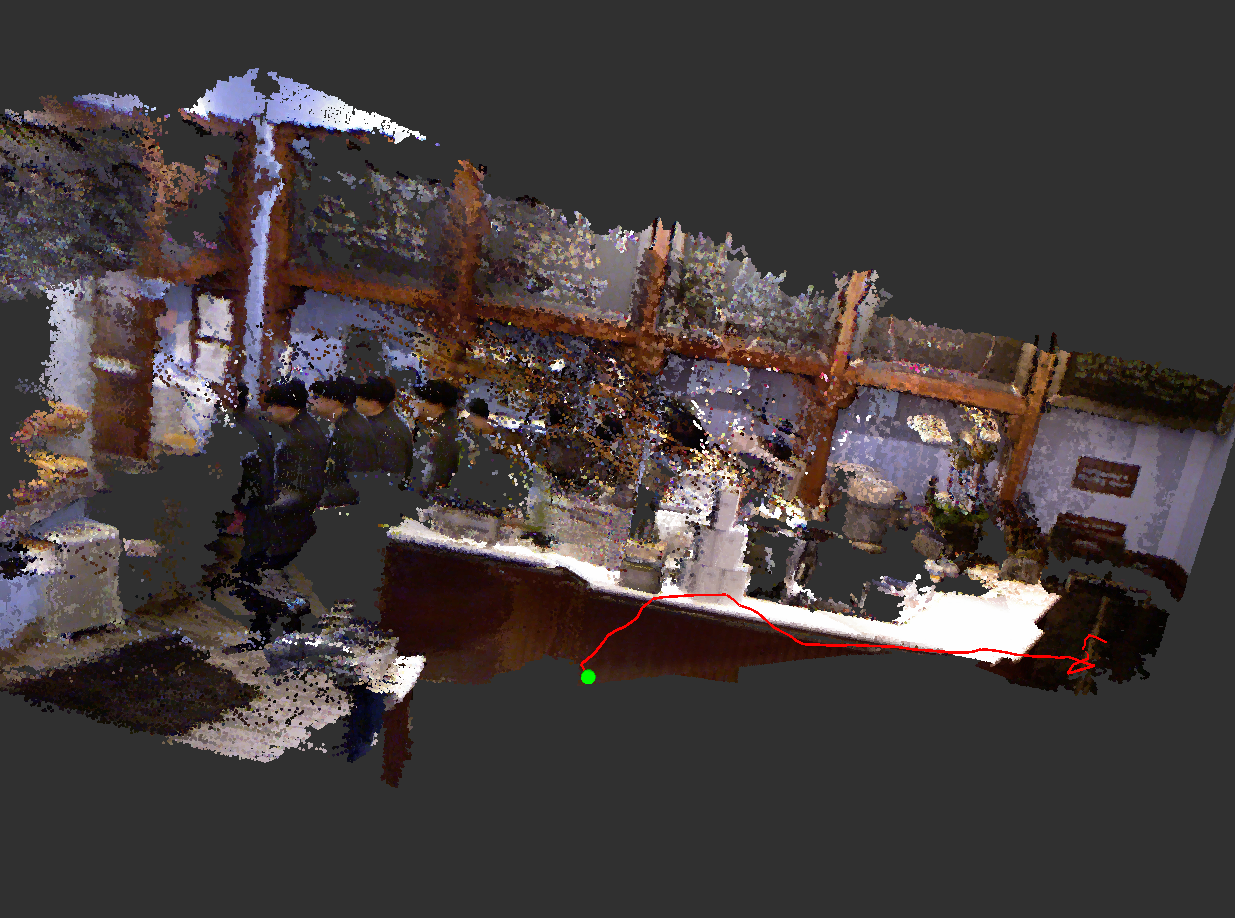

A simultaneous localization and mapping (SLAM) project using RGB-D data from a Microsoft Kinect.

I have taken the following courses at the University of Michigan: